Johnny Mnemonic (1995)

That was the danger Samuel Butler jestingly prophesied in Erewhon,

the danger that the human being might become a means

whereby the machine perpetuated itself and extended its dominion.

—Lewis Mumford[1]

Futures made of virtual insanity

Now always seem to be governed by this love we have

For useless twisting of our new technology

Oh, now there is no sound for we all live underground

—Jamiroquai[2]

From the Telegraph to the Television

In the 1992 science fiction film The Lawnmower Man, a mentally challenged groundskeeper named Jobe becomes hyper-intelligent through experimental virtual reality treatments. As his intellect evolves, he develops telepathic and telekinetic abilities. By the end of the movie, Jobe transforms into pure energy, no longer requiring his physical form and completely merging with the virtual realm, claiming that his “birth cry will be the sound of every phone on this planet ringing in unison.”[3]

Dr. Angelo, the scientist who oversees the experiments, works for Virtual Space Industries, a research lab that is funded by a nefarious agency called The Shop. The Shop manipulates Jobe’s treatments to include “aggression factors” so the project can be used for military purposes. When the experiments go awry, Dr. Angelo shrieks at Jobe: “This technology was meant to expand human communication, but you’re not even human anymore! What you’ve become terrifies me—you’re a freak!”[4] As expected, the promise of advanced cognitive capabilities that virtual technology offered at the beginning of the film was quickly usurped by the crooked corporation by the end of the film, causing harm to both the individual and the scientific potential of the groundbreaking research.

The Lawnmower Man (1992)

The Lawnmower Man displayed some impressive computer graphics (similar in style to the 1982 movie Tron), but it was not a good film by any other meaningful cinematic standard. What is memorable, however, is that it accurately captured the tense relationship between techno-optimism and techno-cynicism that reigned in the 1990s.[5] On one hand, technology at the end of the twentieth century seemed to hold considerable promise for humankind. The launch of the world wide web allowed people around the globe to access an unprecedented amount of information on every possible subject.[6] Satellite technology and cellular phones gave people the ability to communicate farther and faster than ever before.[7] Advances in genetics and artificial intelligence offered possibilities in a variety of areas, from curing disease to accelerating human evolution.[8] Even new computer technology provided the potential for improving filmmaking and other creative endeavors.[9]

On the other hand, these technologies came with a host of downsides, especially when they were advanced under military or corporate control. The web should have allowed for the democratization of information, but instead, internet giants like Google and Facebook manipulated algorithms to increase advertising revenues, not access to quality information.[10] Social media ensured that individuals could stay in touch with each other more than ever before, but the format increased addictive and performative behaviors, consumerism, echo chambers, and, ironically, loneliness and isolation.[11] Computer generated imagery (CGI) and digital filming did not live up to the hype, and in most cases harmed the aesthetic experience more than helped it.[12] And the scientific achievements that promised to extend and better human life became funded and studied for their use in war or profit-making.[13]

Despite its corniness, The Lawnmower Man captured this dichotomy by comparing the promises of the virtual world against the pitfalls of capitalism, and how advanced technologies could become unmanageable despite scientists’ best intentions. Jobe predicted that “virtual reality will grow, just as the telegraph grew to the telephone, as the radio to the TV; it will be everywhere.”[14] While prescient, The Lawnmower Man was just one of many films during this time that captured this sensibility. For cinema, the 1990s marked the era of special-effects blockbusters versus low-budget indies, many of which grappled with ideas about techno-optimism versus techno-cynicism, whether subtly or explicitly. Despite being considered a cheesy decade for film, nineties cinema revealed profound social and political anxieties that stemmed from an era of globalization and cultural change precipitated by late capitalism and cutting-edge technology. For all the hopes that these new technologies were supposed to bring, nineties cinema exposed the skepticism and fears that lurked beneath the optimistic surface.

Technology at the Century’s Turn: Savior or Destroyer?

The late twentieth century was rich with the idea that nineties technology would make the world a better place. Techno-utopians argued that the ability to spread information around the world at lightning speeds was akin to spreading the Enlightenment into the darkest, most backward corners of the Earth. The impact of the web was frequently compared to the impact of the printing press.[15] This unprecedented access to information meant that medical breakthroughs could spread to where they were needed most, business could be conducted without storefronts, and people could connect like never before.

But as early as 1992, some cultural theorists were not as optimistic. In his well-circulated article in the Atlantic, “Jihad vs. McWorld,” political theorist Benjamin Barber criticized the prevalent views on technology’s possibilities. Barber recognized that the technological breakthroughs of the 1990s paved the way for “open communication, a common discourse rooted in rationality, collaboration, and an easy and regular flow and exchange of information.”[16] However, concerned about the political implications of globalization, Barber noted how this new global technology leads to a “homogenization and Americanization” of culture, and that it “lends itself to surveillance as well as liberty, to new forms of manipulation and covert control as well as new kinds of participation, to skewed, unjust market outcomes as well as greater productivity.”[17]

The same year, cultural theorist Neil Postman shared similar concerns. In his book Technopoly, Postman compared the nineties techno-optimism to a kind of religious surrender, where “culture seeks its authorization in technology, finds its satisfactions in technology, and takes its orders from technology.”[18] Rather than having a democratizing effect, Postman claimed that these new technologies lead to “knowledge monopolies,” where the knowledge that would be disseminated to the masses would necessarily be the prescribed knowledge of the ruling classes who owned it.[19] In other words, government and corporate control mitigated the potential of nineties technologies, and these scientific innovations became tools for social and economic control.

Barber and Postman were but two individuals from a larger group of concerned cultural theorists whom some derogatorily called the “neo-Luddites.”[20] Techno-optimists accused these techno-cynics of grumpily resisting the ways of the future, yet these condemnations did not accurately reflect their grievances. It was not a resistance to change that motivated critics to voice concern. Rather, it was the way that these technologies could be easily used for social manipulation and control under a capitalist system.[21] Although these assessments echo those by earlier critics of technology, the collective scientific innovations of the late twentieth century far surpassed the capabilities of technology’s past, creating a unique set of possibilities and challenges that earlier critics may have imagined but that were now becoming a reality.

Science on the Big Screen

While cultural theorists and scientists debated these concerns, it was through cinema that these ideas more widely extended to the general public. Nineties films not only showcased futuristic technologies (many of which became actualized), but they also grappled with their possible pitfalls. Take for example one futuristic technology that appears frequently in nineties cinema: the self-driving car. Always appearing as a background prop, the autonomous car represented an innovation of the near future while showing its inescapable failures. Robotic cars showed up in many science fiction films at the time: Total Recall (1990), Demolition Man (1993), The Fifth Element (1997), Minority Report (2002). In each instance of its appearance, the machine that is supposed to make life a little bit easier inevitably causes problems. For example, during an intense action scene in Total Recall, Douglas Quaid jumps into the Johnny Cab, yelling at the robot driver to drive away, but the smiling robot cabbie does not comprehend his command and insists that Douglas give him a destination. When Douglas sees the enemy approaching the car, he exclaims, “Shit! Shit!” which just causes the cabbie to calmly state, “I’m not familiar with that address.”[22] Enraged, Douglas rips the robot out of the driver’s seat and proceeds to drive the car manually, which is the same kind of reaction that every other protagonist in these movies had as well. When it mattered, the self-driving car was just a hindrance, and the characters in these films always had to take over the driving themselves.

Total Recall (1990)

The hesitancy to accept self-driving technology was rooted in real concerns about the functionality and safety of these machines. In 1991, the U.S. spent over $600 million researching the possibilities of a fully automated highway system.[23] Despite advances in navigation mapping and sensory perception, self-driving cars still posed technical and even ethical challenges. Driving is more than just the efficiency of the technology involved; it requires complex, split-second decision-making. While self-driving cars can assess distances and the impact of hindrances in their immediate environment, they cannot be relied upon to make the correct choice when presented with an ethical dilemma.[24] International surveys revealed that robotic cars tend to make utilitarian calculations that differ drastically from the kinds of decisions that would be made by a human, and even human moral choices vary wildly.[25] I, Robot (2004) explored this idea as Detective Spooner explains his distrust for machine decision-making when a robot saved his life instead of saving the life of a young girl: “I was the logical choice. It calculated I had a 45 percent chance of survival; Sarah only had an 11 percent chance. That was somebody’s baby. Eleven was more than enough. A human would have known that. But robots, no, they’re just lights and clockwork. But you know what: You go ahead and trust them if you wanna.”[26]

The distrust of machines like self-driving cars was representative of a larger suspicion towards artificial intelligence (AI) in general, a recurring theme in nineties films. AI appeared in movies as early as Metropolis in 1927, and self-aware robots in cinema have become household names throughout every decade of cinema history: Gort in the 1950s, HAL 9000 in the 1960s, R2‑D2 and C-3PO in the 1970s, Johnny Five in the 1980s. But films in the 1990s interacted with the concept of AI a bit differently because many of the scientific advances in AI were actually happening in real time.

Throughout the 1960s, scientists expressed a lot of interest in studying robotics and machine intelligence. But by the 1970s, researchers faced significant funding cuts and did not have the necessary computing power to make many advances in the field.[27] However, the 1980s saw the rise of “expert systems,” computer systems that use expert knowledge and logical reasoning to make complex decisions. Expert systems were really the first viable form of AI software.[28] By the 1990s, the computing advances achieved a decade earlier combined with innovations in the field of cybernetics and with research done by cognitive scientists to produce “intelligent agents,” autonomous entities that can sense their environment, make independent decisions, and even learn and self-adjust.[29] Intelligent agents dominated AI research in the 1990s and continued into the 2000s.

Artificial intelligence promised an array of possibilities, but many hesitated to welcome this new technology with open arms. People have always been wary of “soulless machines,” but the idea of them had been relegated to the realm of science fiction for most of human history. Yet, in the 1990s, intelligent robots were no longer found solely in literary hypotheticals—they were becoming real. And this frightening new reality revealed itself in many of the era’s popular movies.

The Terminator films provide the most obvious example of AI as a technology to be feared. In Terminator 2: Judgment Day (1991), the more advanced Terminator, the T-1000, is shown as highly adaptable and nearly indestructible.[30] T-1000 could instantly repair itself through the use of nanotechnology, a kind of technology that relies upon molecular manipulation (the altering of bacteria, cells, DNA, etc.). The concept of nanotechnology was introduced in the late 1950s by respected physicist Richard Feynman, but molecular engineer Kim Eric Drexler elaborated on the idea extensively in the late 1980s.[31] By the time Terminator 2 arrived in theaters, nanotechnology had become a vibrant field that promised faster computers and regenerative medicine. However, with its potential also came suspicion. Many feared that the manipulation of tiny molecules and even tiny machines (nanobots) could lead to everything from uncontrollable computer viruses to environmental or biological disaster.[32] These fears were even explored in the 1989 Star Trek episode “Evolution,” where nanites (nanobots) replicated themselves and evolved at a rapid pace, producing nitrogen dioxide as a defense mechanism and altering the computer’s core.[33]

Terminator 2: Judgment Day (1991)

The T-1000’s self-repairing abilities were scary enough, but it could also accurately mimic a human’s voice and appearance, making it not only difficult to kill, but nearly impossible to detect its true form. This ability is best remembered in the scene where the T-1000 shapeshifts into John Connor’s foster mom and tricks him by copying her voice on the phone (the scene also uses impressive computer graphics that showcased new film technology, producing strikingly realistic visuals).[34] What is even more frightening about Terminator 2 is that this T-1000 is just one machine out of a possible many that Skynet, the overarching artificial intelligence neural network, created to defend itself against the human threat. So, not only is this particular T-1000 terrifying, but knowing the source of its existence hints at a larger, lurking danger of AI, expanding its reach and taking control of the human world.

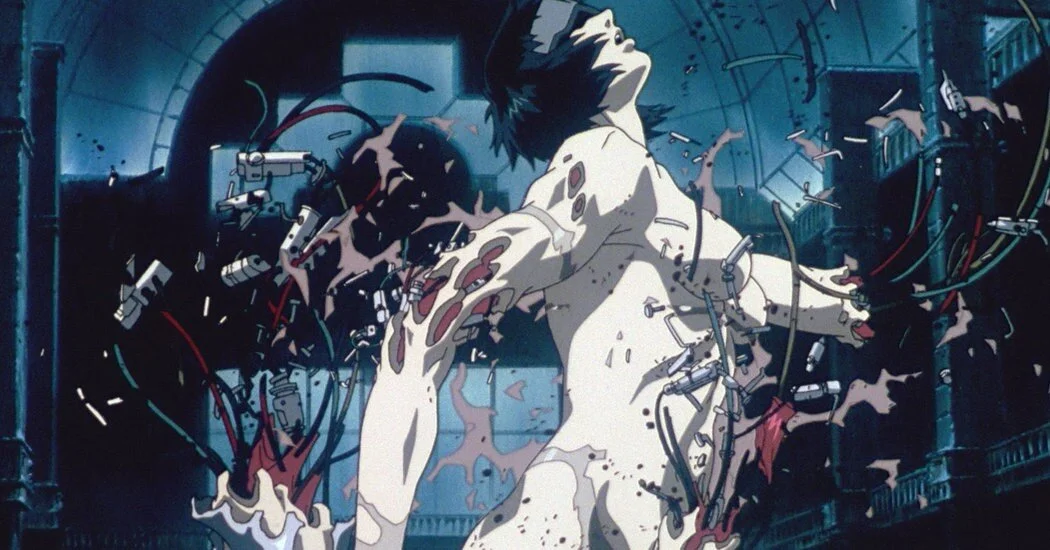

As the possibilities of artificial intelligence became more advanced in the 1990s, cinema began to explore deeper philosophical questions that AI raised, specifically those related to identity and personhood. Take for instance the 1995 movie Ghost in the Shell. The film is set in a futuristic world where technology has advanced such that the human body can be augmented with cybernetic parts, especially with the “cyberbrain” (referred to as the “ghost”), which allows one’s consciousness to link to the internet. The main character, the Puppet Master, is a ghost trapped inside a cybernetic body (the “shell”), and he grapples with the mind–body problem, a philosophical dilemma that seeks to understand how the relationship between consciousness and the physical self bears on identity.[35] As the neuroscience field expanded in the 1990s (due in large part to better imaging technology), academics revisited the mind–body problem with fresh eyes.[36] Philosophers such as Daniel Dennett and David Chalmers revived this debate as cybernetic bodies and brains were becoming a real possibility.[37] Simultaneously, transhumanism, a movement that sought to improve human existence through technology, gained momentum in the 1990s because of these possibilities.[38] On one hand, critics viewed the blending of humans with robots as rife with ethical problems for identity and personhood. On the other hand, optimists saw the merging of man with machine as a positive evolutionary step. The position presented in Ghost in the Shell is ambiguous, but the Puppet Master does declare at one point: “Your effort to remain what you are is what limits you.”[39]

Ghost in the Shell (1995)

This desire to alter one’s physical makeup went beyond cybernetics, however. In the late 1980s and 1990s, the field of genetics experienced a series of important breakthroughs, causing people to consider the potential that could come from altering one’s DNA. In 1985, geneticist Alec Jeffreys revealed the method for DNA fingerprinting, a method for identifying a person’s unique DNA characteristics, allowing scientists to isolate disease-causing genes with the hopes of altering or repairing them.[40] A couple years later, molecular biologist Yoshizumi Ishino discovered the DNA sequence of Clustered Regularly Interspaced Short Palindromic Repeats (CRISPR), which are DNA sequences found within unicellular organisms like bacteria. The discovery of CRISPR opened the door for scientists to find solutions for issues within the fields of medicine, agriculture, and geology.[41] A series of other important discoveries in genetics occurred during this time, everything from new knowledge about the enzymatic activities of RNA to the identification of specific gene abnormalities, like that of cystic fibrosis and breast cancer. By 1990, genetic research had garnered such wide-scale interest that scientists formed the Human Genome Project, an international research project with the intention of mapping all the genes in the human genome (successfully completed in 2003). The Human Genome Project promised benefits in an array of fields, most specifically the potential to cure cancer and other biological disorders.[42]

But the one genetic breakthrough that most people living in the nineties would immediately recall is the cloning of the sheep Dolly. Dolly was the first mammal to be successfully cloned from a somatic cell, a technique allowing an identical genetic clone to be produced.[43] It was not long after the cloning of Dolly that scientists began to clone other mammals as well (pigs, dogs, etc.). Almost immediately, debates about human cloning emerged. Scientists promised to create a human clone within a few years, but the general public feared this notion, and the practice was even outlawed in various places around the world.[44] In 1998, scientists fulfilled their promise and created the first successful human clone, but they destroyed the embryo after a couple weeks of viability.[45] Indeed, the notion of cloning was no longer science fiction—it was now a reality that had to be grappled with.

Public awareness about genetics and cloning became visible in many movies of the 1990s. Species (1995), 12 Monkeys (1995), Multiplicity (1996), The Island of Dr. Moreau (1996), Alien: Resurrection (1997), and Habitat (1997), to name a few, all showcased dystopian fears about the science of genetics, cloning, or molecular manipulation gone wrong. The most notable film of this kind to deal with these issues head-on was the 1997 film Gattaca (its name derived from the four nucleobases of DNA: Guanine, Adenine, Thymine, Cytosine).[46] The people living in this futuristic world are created through eugenics, where preferred genetic traits determine one’s fate in the larger societal structure. Vincent Freeman was conceived naturally without genetic engineering, but he wished to become an astronaut despite his genetic inferiority. Even though employment discrimination in this world is technically illegal, companies still use genetic profiling to aid their hiring decisions, effectively allowing a backdoor for discriminatory practices to prevail anyway. Vincent stated, “I belonged to a new underclass, no longer determined by social status or the color of your skin. No, we now have discrimination down to a science.”[47] These fears displayed in popular culture bled into real-life politics, causing Congress to pass the Genetic Information Nondiscrimination Act (GINA) in 2008, a law that makes it illegal for employers or health insurers to discriminate based on one’s genes.[48] Gattaca keenly captured the tensions between the techno-optimists, who viewed genetic science as a way to rid society of unwanted diseases and traits, and the techno-skeptics, who viewed genetic science as a precursor to a kind of dystopian genetic determinism. As the tagline for the film reminded viewers, “There is no gene for the human spirit.”[49]

Gattaca (1997)

Most remembered, perhaps, is the role that genetic research played in Jurassic Park (1993). A day before the film’s release, Nature published an important article about the successful isolation of DNA from a 120-million-year-old weevil fossilized in amber.[50] This field of ancient DNA research formed the basis for Jurassic Park’s storyline, yet the field would have likely remained unknown to the general public if not for its exploration within the film. Jurassic Park even included an animated film-within-a-film feature called “Mr. DNA Sequence” that educated the audience on the basic genetic knowledge needed to accept dinosaur clones as a real possibility.[51] The studio’s use of innovative special effects, which made it one of the first films to blend CGI with live action (just as Terminator 2 did a couple years prior), added to the sense of realism needed to keep an outlandish premise in the realm of the probable. As expected, the film ultimately criticized scientific hubris, all while capitalizing on the public’s natural fears about molecular manipulation.

Living in the Virtual Realm

Debates about artificial intelligence and genetics reigned in the 1990s, but perhaps no technological breakthrough stimulated such impassioned responses as did the internet. People divided themselves into two camps: those who felt the internet would democratize information, increase communication, and improve daily life versus those who felt the internet would lead to information monopolies, decrease meaningful social interaction, invade privacy, and reduce self-sufficiency. Time Magazine claimed that the internet was “about community and collaboration on a scale never seen before.”[52] Perhaps unsurprisingly, internet optimists tended to be those in STEM fields, standing to benefit the most financially from promoting the internet’s charms. Typically, the techno-optimists sold the internet as a technological messiah, or as Google CEO Eric Schmidt put it, “If we get this right, I believe we can fix all the world’s problems.”[53]

Techno-skeptics were less quick to share their faith. Novelist Jonathan Franzen, one of the internet’s earliest critics, noted how “technovisionaries of the 1990s promised that the internet would usher in a new world of peace, love, and understanding,” and that this attitude persists today: “Twitter executives are still banging the utopianist drum, claiming foundational credit for the Arab Spring. To listen to them, you’d think it was inconceivable that eastern Europe could liberate itself from the Soviets without the benefit of cellphones, or that a bunch of Americans revolted against the British and produced the U.S. Constitution without 4G capability.”[54]

This snark prompted accusations of luddism, but Franzen and his ilk denied those charges, instead asserting that blind faith in this new technology was foolhardy. During the height of MySpace popularity, social scientists began observing effects that the internet, specifically social media, had on online behavior, noting that the format led to a kind of performative culture where individuals adjust their identity in order to project a certain image of themselves. Journalist Lakshmi Chaudhry said that the web’s “greatest successes have capitalized on our need to feel significant and admired and, above all, to be seen.”[55] Cultural critic Emily Nussbaum explored the ways that the internet obliterated any sense of privacy, especially as teenagers documented and broadcasted every aspect of their adolescence in the virtual world.[56] And tech writer Nicholas Carr argued that overreliance on web searches diminished human ability to research properly and read deeply. “Never has a communications system played so many roles in our lives—or exerted such broad influence over our thoughts—as the Internet does today,” Carr stated, “Yet, for all that’s been written about the Net, there’s been little consideration of how, exactly, it’s reprogramming us.”[57]

These tensions still exist, but during the nineties, the internet had not yet become the behemoth that it is today. The virtual realm was still novel and mysterious, so the perceptions of it portrayed in cinema were a mix of hopes and fears. Take for instance the notion of hacking. Drawing largely from cyberpunk themes of the 1980s, movies portrayed hackers both as members of a suspicious subculture and as youthful rejects possessing the skills required for a strange new techno-future. This duality is seen in many hacker films of the 1990s, especially the 1995 film Hackers, which tells the story of teenage hackers who get involved in a corporate conspiracy.[58] The film quotes “The Conscience of a Hacker,” a real-life manifesto that demanded hackers use their skills for good by making information available to the public.[59] Despite their criminal activity, hackers, according to the manifesto, were really the secret superheroes of the internet, using their skills to expose corporate and military greed and deception. As the manifesto itself explains: “We explore and you call us criminals. We seek after knowledge and you call us criminals. We exist without skin color, without nationality, without religious bias and you call us criminals. You build atomic bombs, you wage wars, you murder, cheat, and lie to us and try to make us believe it’s for our own good, yet we’re the criminals.”[60]

Hackers (1995)

Mindwarp (1992), Brainscan (1994), Johnny Mnemonic (1995), Strange Days (1995), The Net (1995), Virtuosity (1995), Webmaster (1998), and eXistenZ (1999) are just a handful of the films from this era that deal with hackers, cybercrime, and virtual reality. But arguably the most memorable nineties film from this genre is The Matrix (1999). The Matrix drew upon the visuals and themes of previous dystopian films like Ghost in the Shell, Hard Boiled (1992), and Dark City (1998); philosophies of Plato, Zhuangzi, and René Descartes; the novels of Lewis Carroll and William Gibson; and a collection of religious ideas to create a hodgepodge science fiction blockbuster with cutting-edge special effects. The story follows a hacker named Neo who discovers that intelligent machines have enslaved humans in a simulated reality, and he must battle agents, computer programs within the matrix that police the system.[61] The movie touches on all the nineties technological fears that have already been mentioned, but it functions largely as an allegory for the internet.

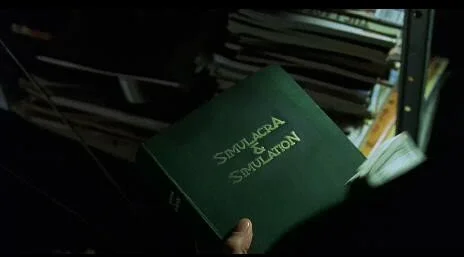

As a parable for late twentieth-century technology, the philosophy behind the film (and a good portion of the script as well) relied largely upon the works of Jean Baudrillard, especially his book Simulacra and Simulation, which was required reading for the actors.[62] Written in 1981, Simulacra and Simulation predicted the ways that the media and internet culture would obscure human understanding of reality.[63] Because of the way the internet feeds people a constant stream of information and images, humans can no longer differentiate between the real world and the simulated world, and instead, they become merely a reflection—a “pure screen” as Baudrillard puts it—that absorbs all this excessive data.[64] Audiences would notice this concept best in one of the film’s early scenes, where Neo is asleep as the computer blasts non-stop images onto his face.[65] The same scene visually sets up the key idea behind the film as Neo opens up his own copy of Simulacra and Simulation to the chapter “On Nihilism,” where Baudrillard notes, “The universe and all of us have entered live into simulation.”[66]

The Matrix (1999)

Baudrillard’s ideas fit into the television and videogame culture of the 1980s, but by the 1990s—with the proliferation of internet advertising, 24-hour news, and the beginnings of social media—his ideas proved visionary. The internet did indeed become a vehicle for non-stop information and images that blurred the line between the real and the virtual, and by the 2000s, people would spend more time online than in the actual world. But what is more relevant to his ideas in The Matrix is Baudrillard’s notion of the “triumph of the object.” For Baudrillard, humans are no longer the subjects who control the world of objects. Rather, this new technological world inverts this relationship: the objects now control the subjects, or, as Henry David Thoreau famously put it: “We do not ride on the railroad; it rides upon us.”[67] This is the case in The Matrix where people have been turned into batteries—a mere energy source for the machines that were initially built by humans. Like the internet, the technology becomes more powerful than the humans who originally created it and eventually controls every aspect of human life.

The Machines or the Money?

The Matrix captured many of the concerns expressed by techno-skeptics: the blending of man and machine, the uncontrollability of artificial intelligence, and the supremacy of the web. But another reading of the film, and others like it, is a critique of the dominance of capitalism. Humans are just batteries in The Matrix—pure commodities for the machines that enslave them. Most of them reside in a simulation that is just livable enough that they are not spurred to revolt against the system that entraps them. Capitalism, especially in its late form, functions in the same way as the matrix. Agent Smith describes the ingenuity of this system:

Have you ever stood and stared at it, marveled at its beauty, its genius? Billions of people just living out their lives, oblivious. Did you know that the first matrix was designed to be a perfect human world, where none suffered, where everyone would be happy? It was a disaster. No one would accept the program; entire crops were lost. Some believed we lacked the programming language to describe your perfect world, but I believe that, as a species, human beings define their reality through misery and suffering. The perfect world was a dream that your primitive cerebrum kept trying to wake up from, which is why the matrix was redesigned to this, the peak of your civilization. I say “your civilization” because as soon as we started thinking for you, it really became our civilization, which is, of course, what this is all about. Evolution, Morpheus, evolution. Like the dinosaur. Look out that window. You’ve had your time. The future is our world, Morpheus. The future is our time.[68]

The matrix thus represents the current reality for most, infiltrating every aspect of human life, overarching and inescapable. It is important to remember that the few within the film who became enlightened to their state were indeed few, and most people remained stuck in the simulated world. In fact, this is the case with most nineties dystopian films: the heroes are usually outcasts or rejects in some way who have tapped into a larger truth that eludes most people. And this trope is not limited to films that dealt with science or technology, either. The nineties were an era for the weirdos and the losers and the slackers who did not fit in the larger neoliberal worldview. This “slacker culture” became a key feature of Generation X angst, but, at its core, the slacker mentality was a low-key resistance to capitalism.[69] In other words, the classic films that captured the Gen X personality, including Slacker (1990), Wayne’s World (1992), Clerks (1994), Fight Club (1999), and Office Space (1999), portrayed characters who rejected the expectation to fit into the capitalist schema. This trait is the same as that of the John Connors and the Neos and all the others who wished to break free from the ominous reality that oppressed them.

Was it the technology itself that posed a problem for techno-skeptics? Or was it the fear that the technology was a conduit for the dangers of capitalism? For the optimists, nineties technology offered the promise of being able to improve society: better health, higher IQs, simpler lives—everything faster and easier and better. But for the skeptics, when these technologies inevitably fell under the control of corporations or the military, they ran the risk of becoming domineering and treacherous. Submission to these technologies did not just mean adapting to changing times—it meant relinquishing one’s humanity. As the scientific innovations of the 1990s happened in real time, the movies captured these tensions between the techno-optimists and techno-skeptics. Only time will tell how accurate these films really were.

Footnotes:

[1] Lewis Mumford, Technics and Civilization (Chicago: University of Chicago Press, 1934), 301.

[2] Jamiroquai, “Virtual Insanity,” Travelling Without Moving (New York: Columbia Records, 1996), song.

[3] The Lawnmower Man, dir. Brett Leonard (Burbank: New Line Cinema, 1992), film.

[4] Ibid.

[5] This essay considers events and phenomena within the long nineties, the period from the late 1980s to the early 2000s. Additionally, many of the films from this era drew upon science fiction stories from the 1960s and 1970s. What is unique about these films in the 1990s, however, is that the technological innovations they depicted were no longer confined to the science fiction imagination; the films were engaging with actual scientific breakthroughs that occurred in the late twentieth century. So, even though some of the storylines in these films were not original, the technological issues they showcased were based on contemporary science, thus giving these movies new meaning for the nineties.

[6] Katie Hafner and Matthew Lyon, Where Wizards Stay Up Late: The Origins of the Internet (New York: Touchstone, 1996), 257–258; Johnny Ryan, A History of the Internet and the Digital Future (London: Reaktion Books, 2010), 105–107.

[7] Guy Klemens, The Cellphone: The History and Technology of the Gadget That Changed the World (Jefferson: McFarland & Company, 2010), 3–4.

[8] Siddhartha Mukherjee, The Gene: An Intimate History (New York: Scribner, 2016), 201–326.

[9] Christopher Finch, The CG Story: Computer-Generated Animation and Special Effects (New York: Monacelli Press, 2013), 99–111.

[10] Kalev Leetaru, “The Perils to Democracy Posed by Big Tech,” Real Clear Politics, November 16, 2018; Farhad Manjoo, “Which Tech Overlords Can You Live Without?” The New York Times, May 11, 2017, B1; Robert Epstein and Ronald Robertson, “The Search Engine Manipulation Effect (SEME) and Its Possible Impact on the Outcomes of Elections,” Proceedings of the National Academy of Sciences 112, no. 33 (2015): E4512–E4521.

[11] Sujeong Choi, “The Flipside of Ubiquitous Connectivity Enabled by Smartphone-Based Social Networking Service: Social Presence and Privacy Concern,” Computers in Human Behavior 65, no. 1 (2016): 325–333; Brian Primack, et al., “Social Media Use and Perceived Social Isolation Among Young Adults in the U.S.,” American Journal of Preventive Medicine 53, no. 1 (2017): 1–8; Michael Pütter, “The Impact of Social Media on Consumer Buying Intention,” Journal of International Business Research and Marketing 3, no. 1 (2017): 7–13; Mohammad Salehan and Arash Negahban, “Social Networking on Smartphones: When Mobile Phones Become Addictive,” Computers in Human Behavior 29, no. 6 (2013): 2632–2639; Ofir Turel and Alexander Serenko, “The Benefits and Dangers of Enjoyment with Social Networking Websites,” European Journal of Information Systems 21, no. 5 (2012): 512–528.

[12] David Christopher Bell, “6 Reasons Modern Movie CGI Looks Surprisingly Crappy,” Cracked, May 12, 2015; Michael James, “10 Reasons Why CGI Is Getting Worse, Not Better,” Rocketstock, June 9, 2015.

[13] “Military Work Threatens Science and Security,” Nature 556, no. 1 (2018): 273.

[14] The Lawnmower Man, film.

[15] James Dewar, “The Information Age and the Printing Press: Looking Backward to See Ahead,” RAND Corporation P-8014 (1998): 1–30.

[16] Benjamin Barber, “Jihad vs. McWorld,” The Atlantic, March 1992, 54.

[17] Ibid., 55.

[18] Neil Postman, Technopoly: The Surrender of Culture to Technology (New York: Vintage Books, 1992), 71–72.

[19] Ibid., 9.

[20] Steven Jones, Against Technology: From the Luddites to Neo-Luddism (New York: Routledge, 2006), 19–20.

[21] For an extended discussion on 1990s technology and its critics, see generally: James Brook and Iain Boal, eds., Resisting the Virtual Life: The Culture and Politics of Information (San Francisco: City Lights, 1995); Kirkpatrick Sale, Rebels Against the Future: The Luddites and Their War on the Industrial Revolution (New York: Perseus Publishing, 1995); Clifford Stoll, Silicon Snake Oil: Second Thoughts on the Information Highway (New York: Doubleday, 1995).

[22] Total Recall, dir. Paul Verhoeven (Hollywood: Carolco Pictures, 1990), film.

[23] Matt Novak, “The National Automated Highway System That Almost Was,” Smithsonian Magazine, May 16, 2013.

[24] Jean-François Bonnefon, Azim Shariff, and Iyad Rahwan, “The Social Dilemma of Autonomous Vehicles,” Science 352, no. 6293 (2016): 1573–1576; Boer Deng, “Machine Ethics: The Robot’s Dilemma,” Nature 523, no. 7558 (2015): 25–26.

[25] Amy Maxmen, “Self-Driving Car Dilemmas Reveal That Moral Choices Are Not Universal,” Nature 562, no. 7728 (2018): 469–470.

[26] I, Robot, dir. Alex Proyas (Los Angeles: Davis Entertainment, 2004), film.

[27] Nils Nilsson, The Quest for Artificial Intelligence: A History of Ideas and Achievements (New York: Cambridge University Press, 2010), 123–202.

[28] Ibid., 205–222.

[29] Ibid., 460–462; Pamela McCorduck, Machines Who Think: A Personal Inquiry into the History and Prospects of Artificial Intelligence (Natick: A K Peters, 2004), 341–342.

[30] Terminator 2: Judgment Day, dir. James Cameron (Hollywood: Carolco Pictures, 1991), film.

[31] For an introduction to the concept of nanotechnology, see: Richard Feynman, “There’s Plenty of Room at the Bottom: An Invitation to Enter a New Field of Physics,” American Physical Society, December 29, 1959, lecture. For an elaboration of nanotechnology into a field during the 1990s, see generally: K. Eric Drexler, Engines of Creation: The Coming Era of Nanotechnology (New York: Doubleday, 1986); K. Eric Drexler, Nanosystems: Molecular Machinery, Manufacturing, and Computing (Hoboken: John Wiley & Sons, 1992).

[32] Nick Bostrom, Superintelligence: Paths, Dangers, Strategies (New York: Oxford University Press, 2014), 114–125.

[33] “Evolution,” Star Trek: The Next Generation, dir. Winrich Kolbe (Hollywood: Paramount Domestic Television, 1989), television.

[34] Terminator 2: Judgment Day, film.

[35] Ghost in the Shell, dir. Mamoru Oshii (Tokyo: Production I.G., 1995), film.

[36] For a detailed history on the field of neuroscience, see generally: Mitchell Glickstein, Neuroscience: A Historical Introduction (Cambridge: MIT Press, 2014); Andrew Wickens, A History of the Brain: From Stone Age Surgery to Modern Neuroscience (New York: Psychology Press, 2015).

[37] The 1990s saw a revival of debates about the mind–body problem. As cognitive scientists gained new ways to observe the brain, philosophers picked up the discussion about consciousness, identity, and the mind. Key figures in this ongoing debate were neuroscientists Francis Crick and Christof Koch, who sought to reclaim the debate from philosophers and argued that science was the key to solving the mystery of consciousness, suggesting that neural components could be found within the physical brain. Neurobiologist Gerald Edelman and neurologist Oliver Sacks also voiced their views on the mind–body problem in the early 1990s, proposing that consciousness arises from neurons reacting to stimuli. At the same time, philosophers Colin McGinn, David Chalmers, and Daniel Dennett debated the extent to which humans can even observe consciousness from an empirical standpoint. For a comparison of these 1990s debates from a philosophical perspective, see: David Chalmers, The Conscious Mind: In Search of a Fundamental Theory (New York: Oxford University Press, 1996); Daniel Dennett, Consciousness Explained (Boston: Back Bay Books, 1991). For a broad history of these debates that burst forth in the 1990s, see generally: Stanislas Dehaene, Consciousness and the Brain: Deciphering How the Brain Codes Our Thoughts (New York: Penguin Books, 2014); Annaka Harris, Conscious: A Brief Guide to the Fundamental Mystery of the Mind (New York: HarperCollins, 2019).

[38] Transhumanism is a movement that seeks to push human evolution beyond its biological capacity through the use of scientific and technological modification. The movement began in 1990 when futurist Max More published his essay “Transhumanism: Towards a Futurist Philosophy” online. In 1992, More joined Tom Morrow and established the Extropy Institute, an organization that arranges conferences on the topic of cyberculture. Transhumanism grew out of debates among postmodern theorists about the potential to transition out of old paradigms regarding what qualifies as human nature. Key to this idea were the philosophies of Donna Haraway, who viewed this transition optimistically. Not all theorists, however, would see the blending of man with machine as a positive step. For more on transhumanism, see: Nick Bostrom, “A History of Transhumanist Thought,” Journal of Evolution and Technology 14, no. 1 (2005): 1–30; Donna Haraway, “A Cyborg Manifesto: Science, Technology, and Socialist-Feminism in the Late 20th Century,” in Simians, Cyborgs and Women: The Reinvention of Nature (New York: Routledge, 1991), 149–181; James Hughes, Citizen Cyborg: Why Democratic Societies Must Respond to the Redesigned Human of the Future (Cambridge: Westview Press, 2004).

[39] Ghost in the Shell, film.

[40] Jay Aronson, Genetic Witness: Science, Law, and Controversy in the Making of DNA Profiling (New Brunswick: Rutgers University Press, 2007), 7–10.

[41] Jennifer Doudna and Samuel Sternberg, A Crack in Creation: Gene Editing and the Unthinkable Power to Control Evolution (New York: Harcourt Publishing, 2017), 117–120.

[42] Heidi Chial, “DNA Sequencing Technologies Key to the Human Genome Project,” Nature Education 1, no. 1 (2008): 219.

[43] Ian Wilmut, Keith Campbell, and Colin Tudge, The Second Creation: Dolly and the Age of Biological Control (Cambridge: Harvard University Press, 2001), 3–12.

[44] Ibid., 273.

[45] “The First Human Cloned Embryo,” Scientific American, November 24, 2001.

[46] Gattaca, dir. Andrew Niccol (Hollywood: Columbia Pictures, 1997), film.

[47] Ibid.

[48] The Genetic Information Nondiscrimination Act of 2008, U.S. Equal Employment Opportunity Commission.

[49] Gattaca, film.

[50] Raúl J. Cano, et al., “Amplification and Sequencing of DNA from a 120–135-Million-Year-Old Weevil,” Nature 363, no. 6429 (1993): 536–538.

[51] Jurassic Park, dir. Steven Spielberg (Universal City: Universal Pictures, 1993), film.

[52] Lev Grossman, “You—Yes, You—Are Time’s Person of the Year,” Time Magazine, December 25, 2006.

[53] Evgeny Morozov, “The Perils of Perfection,” The New York Times, March 3, 2013, 1340L.

[54] Jonathan Franzen, “What’s Wrong with the Modern World,” The Guardian, September 14, 2013, 4.

[55] Lakshmi Chaudhry, “Mirror, Mirror on the Web,” The Nation, January 11, 2007, 2.

[56] Emily Nussbaum, “Say Everything,” New York Magazine, February 12, 2007, 24–29, 102–103.

[57] Nicholas Carr, “Is Google Making Us Stupid?” The Atlantic, July/August 2008, 60.

[58] Hackers, dir. Iain Softley (Beverly Hills: MGM Studios, 1995), film.

[59] Loyd Blankenship, “The Conscience of a Hacker,” Phrack Magazine, January 8, 1986.

[60] Ibid.

[61] The Matrix, dir. Lana Wachowski and Lilly Wachowski (Burbank: Warner Bros. Entertainment, 1999), film.

[62] The actors were also required to read the following books that dealt with machine systems and the development of the human mind: Dylan Evans, Introducing Evolutionary Psychology (Flint: Totem Books, 2000); Kevin Kelly, Out of Control: The New Biology of Machines, Social Systems, and the Economic World (New York: Perseus Books, 1994).

[63] Jean Baudrillard, Simulacra and Simulation, trans. Sheila Glaser (Ann Arbor: University of Michigan Press, 1994, orig. 1981), passim.

[64] Jean Baudrillard, America (London: Verso Books, 1988), 27.

[65] The Matrix, film.

[66] Baudrillard, Simulacra and Simulation, 159. When interviewed about the film, Baudrillard noted that The Matrix more closely resembled Plato’s Allegory of the Cave than his ideas because there is a clear separation between the virtual world and the real world in the film. However, Baudrillard argues that the postmodern condition blurs this distinction, and that the real issue is the fact that humans can no longer tell the difference between the real and the virtual—they have converged to become the hyperreal. See also: Aude Lancelin and Jean Baudrillard, “The Matrix Decoded: Le Nouvel Observateur Interview with Jean Baudrillard,” International Journal of Baudrillard Studies 1, no. 2 (2004): 1–4.

[67] Jean Baudrillard, Fatal Strategies, trans. Phil Beitchman (New York: Semiotext(e), 1990, orig. 1983), 25–44; Henry David Thoreau, Walden and Other Writings (New York: Random House, 1992, orig. 1854), 87.

[68] The Matrix, film.

[69] Even though Gen Xers were an incredibly independent and hard-working generation, Boomers often derogatorily called them “slackers” because Boomers misread Gen Xers’ distrust of economic institutions and disgust for blatant consumerism as laziness. See also: Margot Hornblower, “Great Xpectations of So-Called Slackers,” Time Magazine, June 9, 1997, 58.

By Shalon van Tine